A Novel Parallel Execution Model for Many-core Architectures

The System Software Research Team at RIKEN AICS has developed a novel parallel execution model, named PVAS, for many-core architectures. This research and development project was supported by JST/CREST (“Development of System Software Technologies for post-Peta Scale High Performance Computing” research area) in collaboration with the Storage Research Dept., Center for Technology Innovation-Information and Telecommunications, Storage Division of R&D Group, Hitachi, Ltd. and the Department of Information and Computer Science of Keio University. This technology is expected to make technical simulations, such as climate and disaster simulations, run faster with reduced memory consumption. This in turn is expected to help address the social problems we face more efficiently.

Today, many-core architectures are used in many supercomputers. With a many-core architecture, many tasks can run on a computer in parallel. In parallel computation, these tasks exchange data with one another, and the efficiency of this data exchange is the key to the efficiency of parallel execution on many-core architectures.

In the conventional parallel execution model using processes, for example, a process cannot access data owned by other processes, and this limits the performance of parallel computation. With the proposed PVAS execution model, however, a task can access data owned by other tasks. PVAS is implemented on the Linux operating system. Evaluations on a many-core architecture showed that MPI performance improved and that a benchmark program ran up to 20% faster. PVAS was also ported to McKernel[1] to promote the development of applications using the PVAS execution model.

[1]"McKernel" is the name of a lightweight OS kernel for many-core architectures. McKernel will be deployed on the Oakforest-PACS supercomputer of the Joint Center for Advanced High Performance Computing (JCAHPC) and on the post-K supercomputer being developed by RIKEN under the FLAGSHIP 2020 Project backed by the Ministry of Education, Culture, Sports, Science and Technology, Japan.

More detail about McKernel.

Research Team

Yutaka Ishikawa

Team Leader

System Software Research Team

RIKEN Advanced Institute for Computational Science

Kenta Shiga

Director

Storage Research Dept.

Center for Technology Innovation-Information and Telecommunications,

Storage Division of R&D Group

Hitachi, Ltd.

Kenji Kono

Professor

Department of Information and Computer Science

Keio University

Background

Many-core architectures have been chosen for many newly developed supercomputers. Technical computing and big-data processing on these machines call for a novel parallel execution model that suits many-core architectures and runs faster, with lower memory consumption, than conventional execution models.

Technical Details

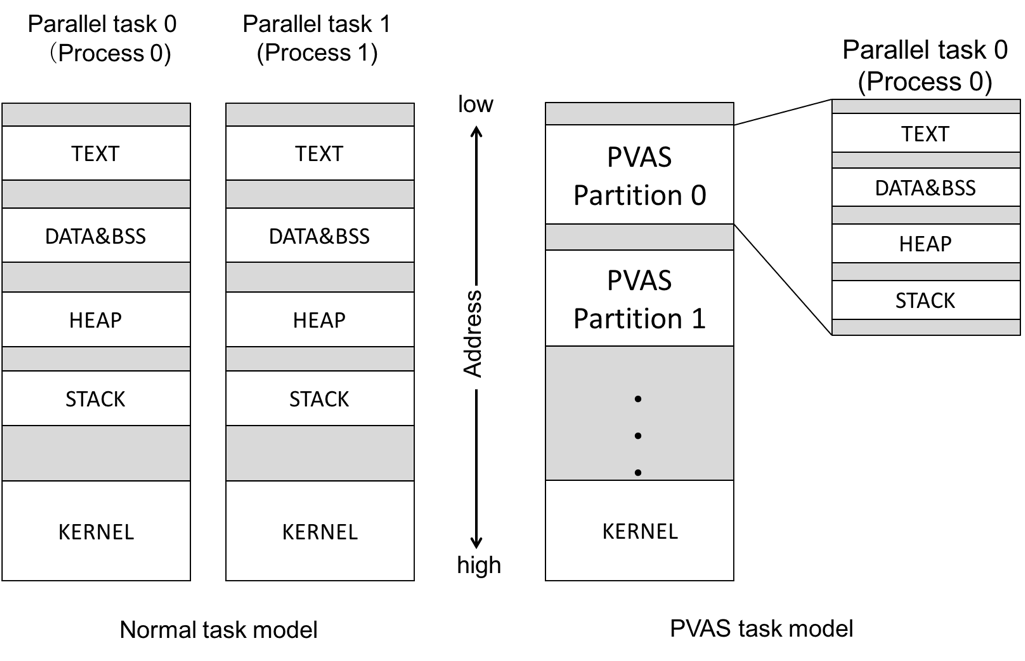

Conventional parallel execution models are based on the use of multiple processes or multiple threads. With the multiple process model, processes cannot access data owned by other processes, which hinders data exchange between processes. With the multiple thread model, data must be protected from being accessed by different threads simultaneously, and this mutual exclusion also impedes execution efficiency. Furthermore, the amount of memory per core is anticipated to decrease, so a new parallel execution model with a small memory footprint is desirable.
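The two conventional models can be illustrated with a minimal Python sketch. It is not part of PVAS and not taken from the project; the function names are hypothetical and the code assumes a POSIX system where `os.fork` is available. The first function shows that a write made in a child process is invisible to its parent, and the second shows threads updating shared data under a lock.

```python
import os
import threading


def child_write_is_invisible_to_parent():
    """Multiple process model: return the parent's view after a child writes."""
    data = [0]
    pid = os.fork()
    if pid == 0:
        # Child process: this write lands in the child's private copy of memory.
        data[0] = 42
        os._exit(0)  # leave immediately without running the parent's code
    os.waitpid(pid, 0)  # wait until the child has finished
    return data[0]  # the parent's copy is unchanged


def threads_share_data(num_threads=4, increments=1000):
    """Multiple thread model: all threads update one counter under a lock."""
    counter = [0]
    lock = threading.Lock()

    def worker():
        for _ in range(increments):
            # Mutual exclusion: only one thread may update the counter at a time.
            with lock:
                counter[0] += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter[0]


if __name__ == "__main__":
    print("parent sees:", child_write_is_invisible_to_parent())
    print("shared counter:", threads_share_data())
```

In the process case the parent would need an explicit mechanism such as a pipe or a shared-memory segment to see the child's value. In the thread case every update pays for the lock.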

Under the proposed parallel execution model, tasks, which are the units of parallel execution, can access the data owned by other tasks, while each task still owns its data and the data are not shared between tasks. As a consequence, the model combines the advantages of the multiple process and multiple thread execution models. It becomes possible to implement parallel execution environments that achieve both high performance and low memory consumption.

Figure 1: Address space comparison between conventional model and the PVAS model

This proposed parallel execution model is named Partitioned Virtual Address Space (PVAS). As shown in Figure 1, multiple tasks can run in the same virtual address space, unlike under the multiple process model. The virtual address space is partitioned, and each task runs in one of the partitions. We applied PVAS to an implementation of the Message Passing Interface (MPI). Our evaluation on a computer with a many-core architecture showed that a benchmark program ran up to 20% faster. Another benchmark program exhibited a 300 MB reduction in memory consumption.
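The idea of partitioning one virtual address space can be sketched as a toy model in Python. This is only an illustration under stated assumptions, not the PVAS implementation: the partition size, the base address, and the names `Partition`, `partition_for_task`, and `owner_of` are all hypothetical, and the source does not say that PVAS partitions are equally sized.

```python
from typing import NamedTuple

# Hypothetical values chosen for illustration; the source does not give them.
PARTITION_SIZE = 1 << 32  # assume 4 GiB per partition
BASE_ADDRESS = 0          # assume the partitioned region starts at address 0


class Partition(NamedTuple):
    task_id: int
    start: int  # first address of the partition (inclusive)
    end: int    # one past the last address (exclusive)


def partition_for_task(task_id):
    """Return the address range assigned to a task in this toy model."""
    if task_id < 0:
        raise ValueError("task_id must be non-negative")
    start = BASE_ADDRESS + task_id * PARTITION_SIZE
    return Partition(task_id, start, start + PARTITION_SIZE)


def owner_of(address):
    """Return the id of the task whose partition contains the address."""
    if address < BASE_ADDRESS:
        raise ValueError("address lies below the partitioned region")
    return (address - BASE_ADDRESS) // PARTITION_SIZE


if __name__ == "__main__":
    p = partition_for_task(3)
    print(hex(p.start), hex(p.end), owner_of(p.start + 10))
```

Because every task's range lies in the same address space, an address inside one partition is meaningful to every other task. This is what allows a task to read another task's data directly in this model.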

Expectations

PVAS is expected to become a basic technology for implementing future system software. Several international research collaboration projects are already under way. The PVAS technology will also be applied to big-data analysis and IoT platforms.

Reference

- Implementing Many-core Friendly MPI Intra-node Communication with New Task Model (in Japanese). Authors: Akio Shimada, Atsushi Hori, Yutaka Ishikawa. Journal: IPSJ Transactions on Advanced Computing Systems (ACS), Vol. 50

- Accelerating MPI Intra-node Communication Using Derived Data Types on Many-core (in Japanese). Authors: Akio Shimada, Atsushi Sutoh, Atsushi Hori, Yutaka Ishikawa, Kenji Kono. Journal: IPSJ Transactions on Advanced Computing Systems (ACS), Vol. 54

Contact

Atsushi Hori

Senior Researcher

System Software Research Team

RIKEN Advanced Institute for Computational Science

TEL:03-5544-8543 FAX:03-5544-8541

E-mail:ahori[at]riken.jp

※Please change [at] to @